The Dark Side of Programming with AI

The world has changed. We programmers feel it more than anybody else. We feel threatened.

The media is full of doom-and-gloom articles preaching the end of the world as we know it and predicting that programmers (and other tech people) will become obsolete: “The AI jobs slaughter is coming for tech first”, “The highly-skilled jobs being replaced by AI”, “Prepare now for the jobs apocalypse – before AI leaves us all on the dole”.

And I understand why these articles are not far from the truth. Enormous sums of money are being poured into AI, so there is enormous pressure on AI to deliver. Every CEO’s wet dream is to replace fickle humans with AIs that are far cheaper and work 24/7. Just look at the recent tech layoff trends: “Klarna CEO says AI ‘can already do all of the jobs’”, “AI Writes Over 25% Of Code At Google”, “Tech sector layoffs mount amid AI investment frenzy”.

The question is: what can we do? Do we just give up or ignore the threat? Whatever happens, happens? Not sure about you, but I’m not going to give up without a fight.

In the 2024 presidential election in Romania, one of the candidates came out of nowhere. Almost nobody had heard of him, yet he still managed to win the first round. He did this by manipulating social media algorithms, which portrayed him as a godsend.

But once people started to learn more about him, they began to understand who he really was. His main economic pitch is that the horse industry will save Romania’s economy, he believes Pepsi cans contain nanobots, he says there is no coronavirus because nobody has seen it, and he claims that Dacia is the real cradle of Earth’s civilization.

This guy reminded me a lot of AI: a godsend that promises to change our civilization but hallucinates a lot.

To fight something you need to understand it. You need to know what it can do and what it cannot do. You need to know its strong points and most importantly, you need to know its weaknesses. You cannot fight the unknown but you can fight a clear and present danger.

So this is my fight plan:

- learn all I can about AI (more specifically, LLMs)

- use it as much as possible to study it

- make the AI work for me instead of against me

- find ways to stay relevant in the face of AI

The problem is that using AI in programming has some dark side effects. We need to be aware of these effects and of the ways to cope with them. Of course, the list is still open: as AI progresses and we learn more about it, we might need to change tactics.

Your own personal Jesus or clumsy assistant?

How you view the AI matters a lot. Your mindset when using the AI is crucial.

There are people who take everything the AI produces as gospel. They don’t question anything. For these people, the AI is like a Personal Jesus. Their own Personal Jesus. These people are zealots, fanatics, bigots.

Believing with religious fervor in AI is not the correct mentality.

AIs are trained on data, lots and lots of data. They learn from this data, and their answers are based only on the data they were trained on. AIs used for programming are trained on lots and lots of code repositories. Guess what: not all those repos contain good code. Thus the code produced by AIs will not always be good.

Sometimes an AI even comes up with crazy things that don’t make any sense. These are called hallucinations. Sometimes you can spot these hallucinations, but sometimes you can’t! Unless you use your brain to question whether the AI’s answer is good or bad, you’ll never be able to detect them.

A much better way to look at AI is to think of it as an intelligent but clumsy assistant. A sidekick. Think Princess Leia and C-3PO, Sherlock Holmes and Dr Watson, Batman and Robin. You are Princess Leia or Sherlock or Batman and the AI is C-3PO, Dr Watson or Robin. You are in control! The final decision is yours! You need to be the main character in the story!

You should view it as an assistant, as an entity that can produce good things most of the time, but not always! You can ask it for help, to explain some code, or to come up with suggestions, etc. You delegate simple tasks, boilerplate code, and so on.

But don’t delegate thinking.

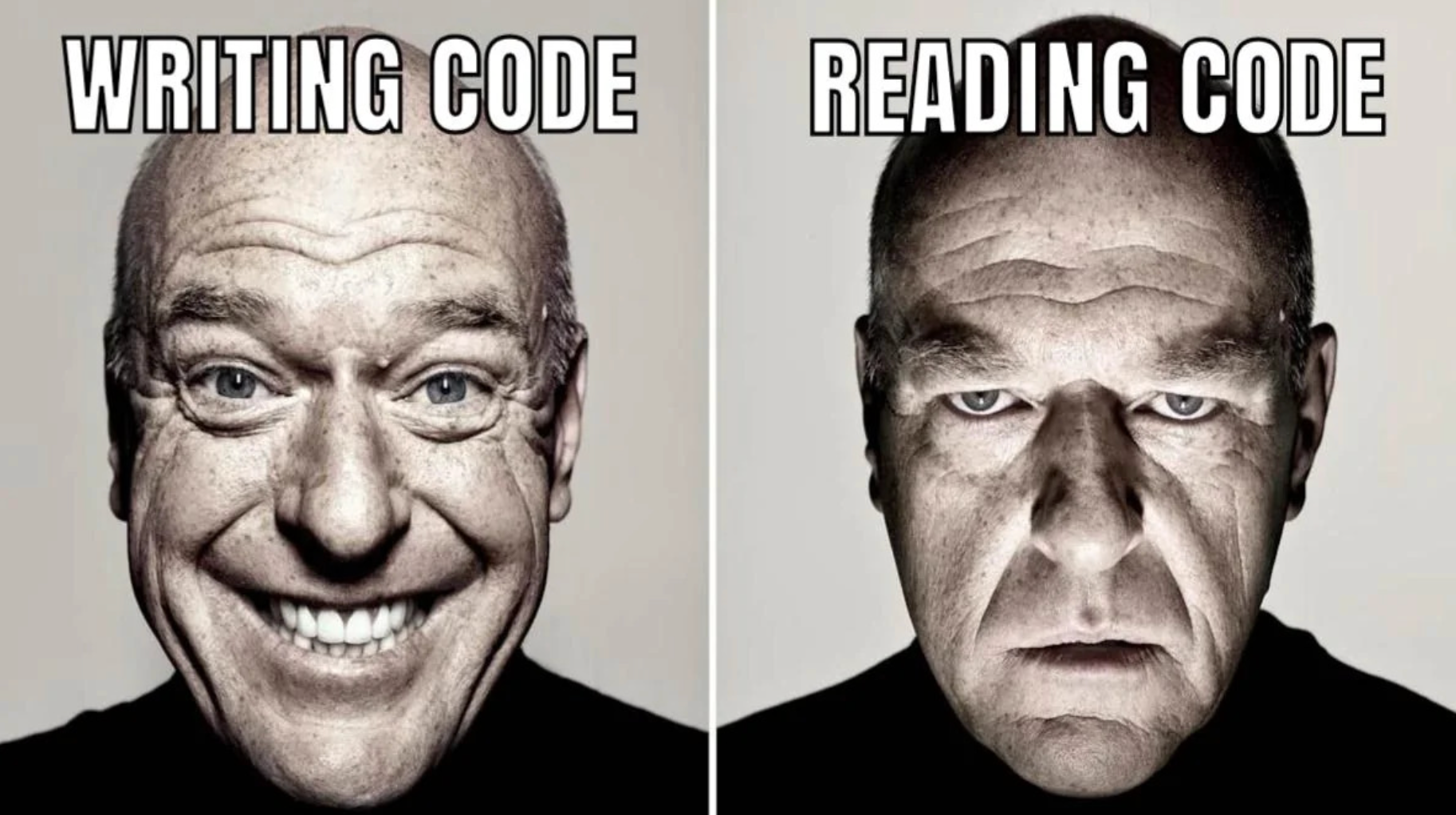

Writing code vs Reading code

Do you like to read code? Reading code is what we do most of the time even now, but at least we still write some code.

Do you like to review code? The reality is that reviewing code is boring. Not much fun. It’s hard to do a good code review.

That’s what you do all day when AI writes the code: you just review what it produces. And that is assuming you are realistic and understand that AI can produce bad code. Sometimes the logic it produces is flawed, wrong, or inefficient, and you need to recognize it.

No more code writing, just code reading.

Where is the fun now? Where is the pride? Where is the craftsmanship? How do you grow? How do you become a better programmer?

Sadly, this is the shape of things to come: we programmers will just review AI-generated code. That is, until another AI takes this job too.

Addicted to AI

‘Brain rot’ named Oxford Word of the Year 2024. I cannot think of anything more appropriate.

With the right mindset, and if you are careful not to delegate too much, programming with AI can be fun: autocompleting a function body, generating a test for a function, learning how to use a third-party function. You are still Princess Leia or Sherlock or Batman.

But over time you’ll trust it more and more. You will delegate more and more.

Over time you will not be able to program without AI anymore. It will be like you’re missing a hand or half of your brain.

As the AI-generated code grows, so will the bugs. How will you be able to debug anything with half of your brain? How will you be able to fix code you don’t understand?

When you’re finally invited to a job interview, you will not be able to articulate a coherent answer, because the answer will not be in your brain. Your brain will instinctively look for the AI to provide it. But the AI will not be there.

Brain rot

Matter of fact it’s all dark

As a result, no one on Earth fully understands the inner workings of LLMs. Researchers are working to gain a better understanding, but this is a slow process that will take years—perhaps decades—to complete. - Timothy B. Lee and Sean Trott

I find it mind-boggling that we are willing to rewrite our code with a technology we don’t fully understand. Who will be responsible when AI-generated code is hacked, causes an airplane to crash, or triggers a nuclear weapon?

Even more disturbing is the fact that AI corporations are trying to create AGI, arrogantly assuming they can control such a beast. There are no moral or ethical dilemmas when you need to “move fast and break things.”

And everyone chooses to ignore the ever-increasing energy required to create new AIs. As if climate change were not bad enough, we throw more fuel on the fire.

I have the feeling our goal is to create the world of WALL-E: an uninhabitable, deserted Earth on which cute intelligent robots are left to clean up the garbage.

We have become comfortably numb.